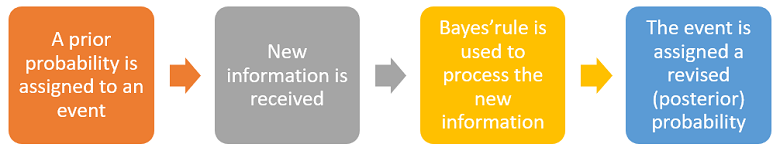

The prior probability is the probability assigned to an event before the arrival of some information that makes it necessary to revise the assigned probability.

The revision of the prior is carried out using Bayes' rule.

The new probability assigned to the event after the revision is called the posterior probability.

The arrival of new information is processed using Bayes' rule:

$$P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)}$$

The prior probability $P(A)$ is assigned to the event $A$ before receiving the information that the event $B$ has happened.

After receiving this information, the prior probability is updated and the posterior probability $P(A \mid B)$ is computed.

In order to perform the calculation, the conditional probability $P(B \mid A)$ needs to be known.

Suppose that an individual is extracted at random from a population consisting of two ethnic groups, ABC and XYZ.

We know that:

- 30% of individuals belonging to group ABC have incomes below the poverty line;

- the corresponding proportion for the population as a whole is 20%;

- 40% of the population is made of individuals belonging to group ABC.

If we extract an individual whose income is below the poverty line, what is the probability that she belongs to group ABC?

This conditional probability can be computed with Bayes' rule.

The quantities involved in the computation are

$$P(\text{ABC}) = 0.4, \qquad P(\text{poverty} \mid \text{ABC}) = 0.3, \qquad P(\text{poverty}) = 0.2$$

The prior probability is $P(\text{ABC}) = 0.4$, the probability of belonging to group ABC.

The posterior probability $P(\text{ABC} \mid \text{poverty})$ can be computed thanks to Bayes' rule:

$$P(\text{ABC} \mid \text{poverty}) = \frac{P(\text{poverty} \mid \text{ABC})\,P(\text{ABC})}{P(\text{poverty})} = \frac{0.3 \cdot 0.4}{0.2} = 0.6$$
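The arithmetic of the poverty-line example can be checked with a few lines of Python. This is only a minimal sketch: the function name `posterior` and the variable names are illustrative choices, not notation used in this entry, and the three input numbers are the percentages stated in the example.

```python
def posterior(prior: float, likelihood: float, evidence: float) -> float:
    """Bayes' rule: P(A|B) = P(B|A) * P(A) / P(B)."""
    if not 0.0 < evidence <= 1.0:
        raise ValueError("evidence P(B) must lie in (0, 1]")
    return likelihood * prior / evidence


# Numbers taken from the example (variable names are hypothetical).
p_abc = 0.4             # prior: P(ABC)
p_poor_given_abc = 0.3  # conditional probability: P(poverty | ABC)
p_poor = 0.2            # probability of the observed event: P(poverty)

p_abc_given_poor = posterior(p_abc, p_poor_given_abc, p_poor)
print(round(p_abc_given_poor, 4))  # 0.6
```

Observing an income below the poverty line raises the probability of membership in group ABC from the prior 0.4 to the posterior 0.6.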

Prior probability is a fundamental concept in Bayesian statistics.

In Bayesian inference, the following conceptual framework is used to analyze observed data and make inferences:

- there are several probability distributions that could have generated the data;

- each possible distribution is assigned a prior probability that reflects the statistician's subjective beliefs and knowledge accumulated before observing the data;

- the observed data is used to update the prior, by using Bayes' rule;

- as a result of the update, all the possible data-generating distributions are assigned posterior probabilities.
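The framework above can be sketched in Python for the simplest case, a finite set of candidate distributions. Everything specific here is an assumption made for illustration: the three candidate Bernoulli success probabilities, the uniform prior over them, the four observations, and the helper names `update` and `bernoulli_likelihood`.

```python
from math import prod


def bernoulli_likelihood(p: float, data: list[int]) -> float:
    """Probability of the observed 0/1 outcomes under success probability p."""
    return prod(p if x == 1 else 1.0 - p for x in data)


def update(priors: list[float], likelihoods: list[float]) -> list[float]:
    """Bayes' rule over a finite set of candidate distributions."""
    unnormalized = [pr * lk for pr, lk in zip(priors, likelihoods)]
    evidence = sum(unnormalized)  # probability of the data under the prior
    return [u / evidence for u in unnormalized]


# Hypothetical setup: three candidate distributions, equally likely a priori.
candidates = [0.25, 0.5, 0.75]
priors = [1 / 3, 1 / 3, 1 / 3]
data = [1, 1, 0, 1]  # three successes, one failure

likelihoods = [bernoulli_likelihood(p, data) for p in candidates]
posteriors = update(priors, likelihoods)

for p, pr, po in zip(candidates, priors, posteriors):
    print(f"p = {p}: prior {pr:.3f}, posterior {po:.3f}")
```

With mostly successes in the data, the posterior shifts weight toward the candidate with the highest success probability, while the posterior probabilities still sum to one.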

In Bayesian statistics, it often happens that the set of probability distributions that could have generated the data is indexed by a parameter.

The parameter is seen as a random variable and it is assigned a subjective probability distribution, which is called the prior distribution.

The prior distribution is then updated, using the observed data, and a posterior distribution is obtained.

Example: Suppose that we observe a number of independent realizations of a Bernoulli random variable (i.e., a variable that is equal to 1 if a certain experiment succeeds and 0 otherwise). In this case, the set of data-generating distributions is the set of all Bernoulli distributions, which are indexed by a single parameter (the probability of success of the experiment). The distribution assigned to the parameter before observing the outcomes of the experiments is the prior distribution (usually a Beta distribution).
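A short Python sketch of this Bernoulli example follows. It relies on a standard result that this entry does not derive: with a Beta(α, β) prior on the success probability, observing s successes and f failures gives a Beta(α + s, β + f) posterior. The uniform Beta(1, 1) prior, the data, and the function names are assumptions chosen for illustration.

```python
def beta_bernoulli_update(alpha: float, beta: float, data: list[int]) -> tuple[float, float]:
    """Return the posterior Beta parameters after observing 0/1 outcomes."""
    successes = sum(data)
    failures = len(data) - successes
    return alpha + successes, beta + failures


def beta_mean(alpha: float, beta: float) -> float:
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)


# Hypothetical prior and data: Beta(1, 1) is the uniform distribution on [0, 1].
alpha0, beta0 = 1.0, 1.0
data = [1, 1, 0, 1]  # three successes, one failure

alpha1, beta1 = beta_bernoulli_update(alpha0, beta0, data)

print("prior mean:    ", beta_mean(alpha0, beta0))
print("posterior mean:", beta_mean(alpha1, beta1))
```

Here the prior distribution plays the role that the prior probability played in the earlier example: it summarizes beliefs about the parameter before the outcomes are observed, and the data move it to the posterior Beta(4, 2).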

To better understand the meaning of a prior in Bayesian statistics, you can read the following examples:

- Bayesian inference on the parameters of a normal distribution;

- Bayesian inference on the parameters of a linear regression model.

The prior probability is also often called unconditional probability, as opposed to the posterior probability, which is a conditional probability.

You can find a more exhaustive explanation of the concept of prior probability in the lecture entitled Bayes' rule.


Please cite as:

Taboga, Marco (2021). "Prior probability", Lectures on probability theory and mathematical statistics. Kindle Direct Publishing. Online appendix. https://www.statlect.com/glossary/prior-probability.
