A property that makes the normal distribution very tractable from an analytical viewpoint is its closure under linear combinations:

the linear combination of two independent random variables having a normal distribution also has a normal distribution.

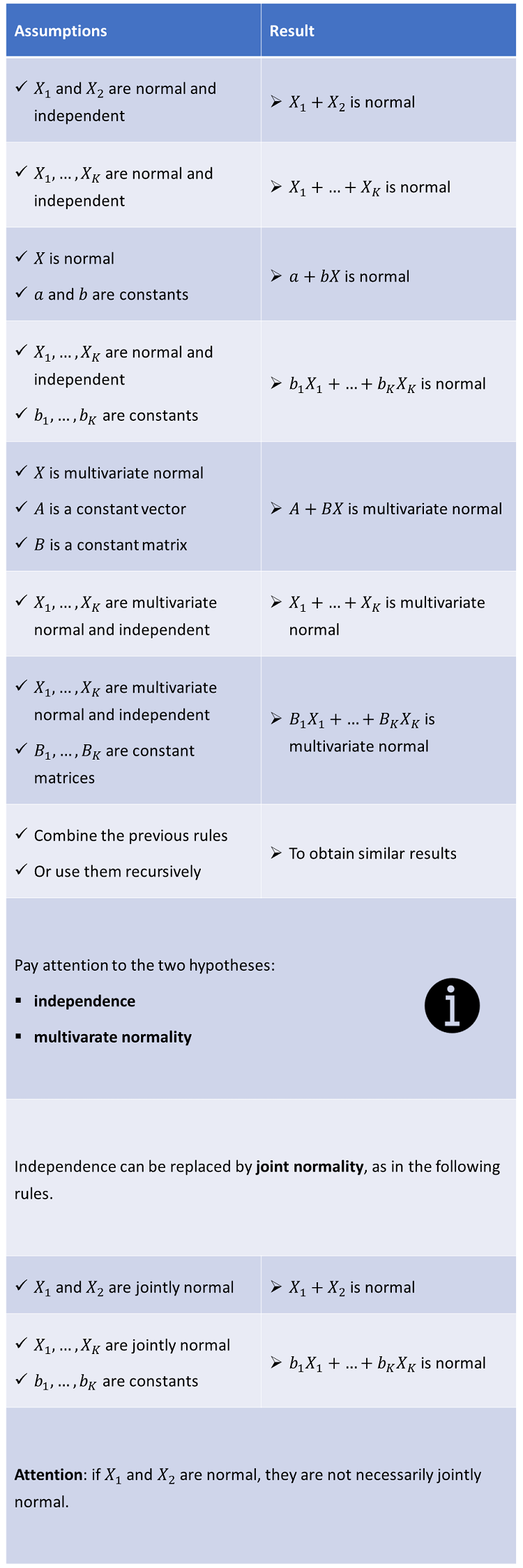

The following sections present a generalization of this elementary property and then discuss some special cases, summarized in the following infographic.


Linear transformation of a multivariate normal random vector

A linear transformation of a multivariate normal random vector also has a multivariate normal distribution.

Proposition

Let $X$ be a $K\times 1$ multivariate normal random vector with mean $\mu$ and covariance matrix $V$. Let $A$ be an $L\times 1$ real vector and $B$ an $L\times K$ full-rank real matrix. Then, the $L\times 1$ random vector $Y$ defined by

$$Y = A + BX$$

has a multivariate normal distribution with mean

$$\mathrm{E}[Y] = A + B\mu$$

and covariance matrix

$$\mathrm{Var}[Y] = BVB^{\top}.$$

This is proved using the formula for the joint moment generating function of the linear transformation of a random vector. The joint moment generating function of $X$ is

$$M_X(t) = \exp\left(t^{\top}\mu + \tfrac{1}{2}t^{\top}Vt\right).$$

Therefore, the joint moment generating function of $Y$ is

$$M_Y(t) = \exp\left(t^{\top}A\right)M_X\left(B^{\top}t\right) = \exp\left(t^{\top}A\right)\exp\left(t^{\top}B\mu + \tfrac{1}{2}t^{\top}BVB^{\top}t\right) = \exp\left(t^{\top}(A + B\mu) + \tfrac{1}{2}t^{\top}BVB^{\top}t\right),$$

which is the moment generating function of a multivariate normal distribution with mean $A + B\mu$ and covariance matrix $BVB^{\top}$.

Note that $BVB^{\top}$ needs to be positive definite in order to be the covariance matrix of a proper multivariate normal distribution, but this is implied by the assumption that $B$ is full-rank (that is, it has rank $L$), given that $V$ is positive definite. Therefore, $Y$ has a multivariate normal distribution with mean $A + B\mu$ and covariance matrix $BVB^{\top}$, because two random vectors have the same distribution when they have the same joint moment generating function.
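As a rough numerical check of this proposition, the sketch below simulates a multivariate normal vector, applies a linear transformation (a shift plus a matrix product), and compares the sample moments with the theoretical mean (shift plus matrix times mean) and covariance (matrix times covariance times matrix transpose). The values of `mu`, `V`, `A` and `B`, the seed and the sample size are arbitrary assumptions chosen for illustration; they do not come from the text.

```python
import numpy as np

# Illustrative values (assumptions, not from the text): K = 3, L = 2.
mu = np.array([1.0, -2.0, 0.5])            # mean of X (K x 1)
V = np.array([[2.0, 0.3, 0.0],
              [0.3, 1.0, 0.2],
              [0.0, 0.2, 1.5]])            # covariance of X (positive definite)
A = np.array([0.5, -1.0])                  # L x 1 shift
B = np.array([[1.0, 2.0, 0.0],
              [0.0, 1.0, -1.0]])           # L x K matrix with rank L

# Moments predicted by the proposition.
mean_Y = A + B @ mu
cov_Y = B @ V @ B.T

# Monte Carlo check: simulate X, transform, and compute sample moments.
rng = np.random.default_rng(0)
X = rng.multivariate_normal(mu, V, size=200_000)   # each row is one draw of X
Y = A + X @ B.T                                    # each row is one draw of Y
sample_mean = Y.mean(axis=0)
sample_cov = np.cov(Y, rowvar=False)

print("theoretical mean:", mean_Y, "sample mean:", sample_mean)
print("theoretical covariance:\n", cov_Y)
print("sample covariance:\n", sample_cov)
```

Agreement of the first two moments does not by itself establish normality; the simulation only illustrates the mean and covariance formulas, while normality follows from the moment generating function argument.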

The following examples present some important special cases of the above property.

The sum of two independent normal random variables has a normal distribution.

Proposition

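Let $X_1$ be a normal random variable with mean $\mu_1$ and variance $\sigma_1^2$. Let $X_2$ be a random variable, independent of $X_1$, having a normal distribution with mean $\mu_2$ and variance $\sigma_2^2$. Then, the random variable $Y$ defined as

$$Y = X_1 + X_2$$

has a normal distribution with mean

$$\mathrm{E}[Y] = \mu_1 + \mu_2$$

and variance

$$\mathrm{Var}[Y] = \sigma_1^2 + \sigma_2^2.$$

First of all, we need to use the fact that mutually independent normal random variables are jointly normal: the $2\times 1$ random vector $X$ defined as

$$X = \begin{bmatrix} X_1 \\ X_2 \end{bmatrix}$$

has a multivariate normal distribution with mean

$$\mu = \begin{bmatrix} \mu_1 \\ \mu_2 \end{bmatrix}$$

and covariance matrix

$$V = \begin{bmatrix} \sigma_1^2 & 0 \\ 0 & \sigma_2^2 \end{bmatrix}.$$

We can write

$$Y = BX,$$

where

$$B = \begin{bmatrix} 1 & 1 \end{bmatrix}.$$

Therefore, according to the above proposition on linear transformations, $Y$ has a normal distribution with mean

$$\mathrm{E}[Y] = B\mu = \mu_1 + \mu_2$$

and variance

$$\mathrm{Var}[Y] = BVB^{\top} = \sigma_1^2 + \sigma_2^2.$$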
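The matrix argument used for the sum of two independent normal random variables can be traced numerically. The sketch below uses hypothetical parameter values (illustrative assumptions only) and checks that, with a row vector of ones as the transformation matrix, the general formulas reduce to the sum of the two means and the sum of the two variances.

```python
import numpy as np

# Hypothetical parameters for X1 and X2 (illustrative assumptions).
mu1, var1 = 1.0, 4.0
mu2, var2 = -3.0, 9.0

mu = np.array([mu1, mu2])        # mean of the vector X = [X1, X2]
V = np.diag([var1, var2])        # diagonal covariance: X1 and X2 are independent
B = np.array([[1.0, 1.0]])       # Y = B X = X1 + X2

mean_Y = (B @ mu).item()         # B mu
var_Y = (B @ V @ B.T).item()     # B V B^T

print(mean_Y, var_Y)             # -2.0 13.0
```

The off-diagonal zeros in `V` encode independence; with a nonzero covariance the same matrix product would add twice that covariance to the variance of the sum.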

The sum of more than two independent normal random variables also has a normal distribution.

Proposition

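Let $X_1,\ldots,X_K$ be $K$ mutually independent normal random variables, having means $\mu_1,\ldots,\mu_K$ and variances $\sigma_1^2,\ldots,\sigma_K^2$. Then, the random variable $Y$ defined as

$$Y = \sum_{i=1}^{K} X_i$$

has a normal distribution with mean

$$\mathrm{E}[Y] = \sum_{i=1}^{K} \mu_i$$

and variance

$$\mathrm{Var}[Y] = \sum_{i=1}^{K} \sigma_i^2.$$

This can be obtained either by generalizing the proof of the proposition in Example 1 (the sum of two independent normal random variables) or by using that proposition recursively (starting from $X_1$ and $X_2$, then adding $X_3$, and so on).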
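The recursive argument can be sketched in a few lines: the two-variable rule (means add, variances add) is folded over a list of parameters. The helper name `add_independent_normals` and the parameter values are hypothetical, introduced only for illustration. Each step relies on mutual independence, which guarantees that a partial sum is independent of the next variable added.

```python
from functools import reduce

def add_independent_normals(p, q):
    """Combine (mean, variance) of two independent normals into those of their sum."""
    return (p[0] + q[0], p[1] + q[1])

# Hypothetical (mean, variance) pairs for four variables (illustrative assumptions).
params = [(1.0, 4.0), (-3.0, 9.0), (0.5, 1.0), (2.0, 0.25)]

# Fold the two-variable rule over the list: ((X1 + X2) + X3) + X4.
mean_Y, var_Y = reduce(add_independent_normals, params)

print(mean_Y, var_Y)   # 0.5 14.25
```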

The properties illustrated in the previous two examples can be further generalized to linear combinations of mutually independent normal random variables.

Example

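Let $X_1,\ldots,X_K$ be $K$ mutually independent normal random variables, having means $\mu_1,\ldots,\mu_K$ and variances $\sigma_1^2,\ldots,\sigma_K^2$. Let $b_1,\ldots,b_K$ be $K$ constants. Then, the random variable $Y$ defined as

$$Y = \sum_{i=1}^{K} b_i X_i$$

has a normal distribution with mean

$$\mathrm{E}[Y] = \sum_{i=1}^{K} b_i \mu_i$$

and variance

$$\mathrm{Var}[Y] = \sum_{i=1}^{K} b_i^2 \sigma_i^2.$$

First of all, we need to use the fact that mutually independent normal random variables are jointly normal: the $K\times 1$ random vector $X$ defined as

$$X = \begin{bmatrix} X_1 & \cdots & X_K \end{bmatrix}^{\top}$$

has a multivariate normal distribution with mean

$$\mu = \begin{bmatrix} \mu_1 & \cdots & \mu_K \end{bmatrix}^{\top}$$

and covariance matrix

$$V = \begin{bmatrix} \sigma_1^2 & 0 & \cdots & 0 \\ 0 & \sigma_2^2 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & \sigma_K^2 \end{bmatrix}.$$

We can write

$$Y = BX,$$

where

$$B = \begin{bmatrix} b_1 & \cdots & b_K \end{bmatrix}.$$

Therefore, according to the above proposition on linear transformations, $Y$ has a (multivariate) normal distribution with mean

$$\mathrm{E}[Y] = B\mu = \sum_{i=1}^{K} b_i \mu_i$$

and variance

$$\mathrm{Var}[Y] = BVB^{\top} = \sum_{i=1}^{K} b_i^2 \sigma_i^2.$$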
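A small helper makes the formulas for a linear combination of independent normal random variables concrete: the mean is the weighted sum of the means, and the variance is the sum of the variances weighted by the squared coefficients. The function name `linear_combination_moments` and the input values are hypothetical, chosen only for illustration; the sketch also cross-checks the result against the matrix form (a row vector of coefficients and a diagonal covariance matrix).

```python
import numpy as np

def linear_combination_moments(b, means, variances):
    """Mean and variance of Y = sum_i b[i] * X_i for independent normal X_i."""
    b = np.asarray(b, dtype=float)
    means = np.asarray(means, dtype=float)
    variances = np.asarray(variances, dtype=float)
    return float(b @ means), float((b ** 2) @ variances)

# Hypothetical inputs (illustrative assumptions): Y = 2*X1 - X2 + 0.5*X3.
b = [2.0, -1.0, 0.5]
means = [1.0, 0.0, 4.0]
variances = [1.0, 2.0, 4.0]

mean_Y, var_Y = linear_combination_moments(b, means, variances)

# Same result from the matrix form: B mu and B V B^T.
B = np.array([b])
V = np.diag(variances)
assert np.isclose(mean_Y, (B @ np.array(means)).item())
assert np.isclose(var_Y, (B @ V @ B.T).item())

print(mean_Y, var_Y)   # 4.0 7.0
```

Note that the coefficient $-1$ on the second variable still increases the variance, because coefficients enter the variance squared.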

Here is a special case of the above proposition on normal random vectors, for the case in which $X$ has dimension $1\times 1$ (i.e., it is a random variable).

Proposition

Let $X$ be a normal random variable with mean $\mu$ and variance $\sigma^2$. Let $a$ and $b$ be two constants (with $b \neq 0$). Then the random variable $Y$ defined by

$$Y = a + bX$$

has a normal distribution with mean

$$\mathrm{E}[Y] = a + b\mu$$

and variance

$$\mathrm{Var}[Y] = b^2\sigma^2.$$

This is just a special case ($K = L = 1$) of the above proposition on linear transformations of multivariate normal vectors.
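A familiar instance, added here as a worked illustration, is standardization. For a normal random variable $X$ with mean $\mu$ and variance $\sigma^2$ (with $\sigma > 0$), take the constants $a = -\mu/\sigma$ and $b = 1/\sigma$. Then

$$Y = a + bX = \frac{X - \mu}{\sigma}, \qquad \mathrm{E}[Y] = -\frac{\mu}{\sigma} + \frac{1}{\sigma}\,\mu = 0, \qquad \mathrm{Var}[Y] = \frac{1}{\sigma^{2}}\,\sigma^{2} = 1,$$

so $Y$ has a standard normal distribution.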

The property illustrated in Example 3 can be generalized to linear combinations of mutually independent normal random vectors.

Proposition

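Let $X_1,\ldots,X_n$ be $n$ mutually independent $K\times 1$ normal random vectors, having means $\mu_1,\ldots,\mu_n$ and covariance matrices $V_1,\ldots,V_n$. Let $B_1,\ldots,B_n$ be $n$ real $L\times K$ full-rank matrices. Then, the $L\times 1$ random vector $Y$ defined as

$$Y = \sum_{i=1}^{n} B_i X_i$$

has a normal distribution with mean

$$\mathrm{E}[Y] = \sum_{i=1}^{n} B_i \mu_i$$

and covariance matrix

$$\mathrm{Var}[Y] = \sum_{i=1}^{n} B_i V_i B_i^{\top}.$$

This is a consequence of the fact that mutually independent normal random vectors are jointly normal: the $nK\times 1$ random vector $X$ defined as

$$X = \begin{bmatrix} X_1^{\top} & \cdots & X_n^{\top} \end{bmatrix}^{\top}$$

has a multivariate normal distribution with mean

$$\mu = \begin{bmatrix} \mu_1^{\top} & \cdots & \mu_n^{\top} \end{bmatrix}^{\top}$$

and covariance matrix

$$V = \begin{bmatrix} V_1 & 0 & \cdots & 0 \\ 0 & V_2 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & V_n \end{bmatrix}.$$

Therefore, we can apply the above proposition on linear transformations to the vector $X$, writing $Y = BX$ with $B = \begin{bmatrix} B_1 & \cdots & B_n \end{bmatrix}$.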
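The stacking argument for linear combinations of independent normal random vectors can be checked numerically. The sketch below, with hypothetical inputs chosen only for illustration (two independent $2\times 1$ vectors and two $2\times 2$ full-rank matrices), computes the mean and covariance term by term and then verifies that the same values come out of the stacked form, with a block-diagonal covariance matrix and the matrices placed side by side.

```python
import numpy as np

# Hypothetical inputs (illustrative assumptions): n = 2 vectors, K = 2, L = 2.
mus = [np.array([1.0, 0.0]), np.array([-1.0, 2.0])]
Vs = [np.array([[1.0, 0.5], [0.5, 2.0]]),
      np.array([[3.0, 0.0], [0.0, 1.0]])]
Bs = [np.array([[1.0, 0.0], [1.0, 1.0]]),
      np.array([[2.0, -1.0], [0.0, 1.0]])]

# Term-by-term formulas: sum_i B_i mu_i and sum_i B_i V_i B_i^T.
mean_Y = sum(B @ m for B, m in zip(Bs, mus))
cov_Y = sum(B @ V @ B.T for B, V in zip(Bs, Vs))

# Stacked form: X = [X_1; X_2] with block-diagonal covariance,
# and B = [B_1 B_2], so that Y = B X.
mu = np.concatenate(mus)
Z = np.zeros((2, 2))
V = np.block([[Vs[0], Z], [Z, Vs[1]]])
B = np.hstack(Bs)

assert np.allclose(mean_Y, B @ mu)
assert np.allclose(cov_Y, B @ V @ B.T)

print(mean_Y)
print(cov_Y)
```

The zero off-diagonal blocks of `V` are what independence of the vectors contributes; they make the cross terms vanish, so the covariance of the sum is the sum of the individual transformed covariances.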

Below you can find some exercises with explained solutions.

Let

be

a

multivariate normal random vector with mean

and

covariance

matrix

Find the distribution of the random variable

defined

as

We can write

where

Being

a linear transformation of a multivariate normal random vector,

is also multivariate normal. Actually, it is univariate normal, because it is

a scalar. Its mean

is

and

its variance

is

![[eq56]](/images/normal-distribution-linear-combinations__116.png)

Let

,

...,

be

mutually independent standard normal random variables. Let

be a constant.

Find the distribution of the random variable

defined

as

Being a linear combination of mutually

independent normal random variables,

has a normal distribution with

mean

![[eq59]](/images/normal-distribution-linear-combinations__124.png)

and variance

![[eq60]](/images/normal-distribution-linear-combinations__125.png)

Please cite as:

Taboga, Marco (2021). "Linear combinations of normal random variables", Lectures on probability theory and mathematical statistics. Kindle Direct Publishing. Online appendix. https://www.statlect.com/probability-distributions/normal-distribution-linear-combinations.
